Generate an SQL Schema from a Migrations Directory

Question

How to generate an SQL schema file from an existing migrations directory to quickly set up a database for integration tests?

How to generate an SQL schema file from an existing migrations directory to quickly set up a database for integration tests?

How do I fix the ModifySchema is not allowed when migration plan is scoped to one schema error?

When I have the same schema deployed across multiple database servers, do I need to plan and approve migrations for each database separately?

How do I resolve MySQL Error 1049: Unknown database when connecting using a URL like: mysql://user:pass@host:port/<database_name>?

Error: mysql: query system variables: Error 1049 (42000): Unknown database '<database_name>'

What does this error mean, and how can I fix it?

How can I define functional indexes in MySQL using Atlas HCL syntax?

How do I configure Atlas to load the database schema from nested directories with HCL files?

/project

├── main.hcl

├── extensions

└── schemas

└── public

├── public.hcl

├── tables

│ ├── pets.hcl

│ ├── posts.hcl

│ ├── profiles.hcl

│ └── user_groups.hcl

├── functions/

└── types/

After upgrading Atlas, you might encounter an error stating "Feature X is no longer supported by this release."

For example:

Error: data.external_schema is no longer supported by this release.

This occurs when you install the community version of Atlas, which lacks some features available only in non-community builds.

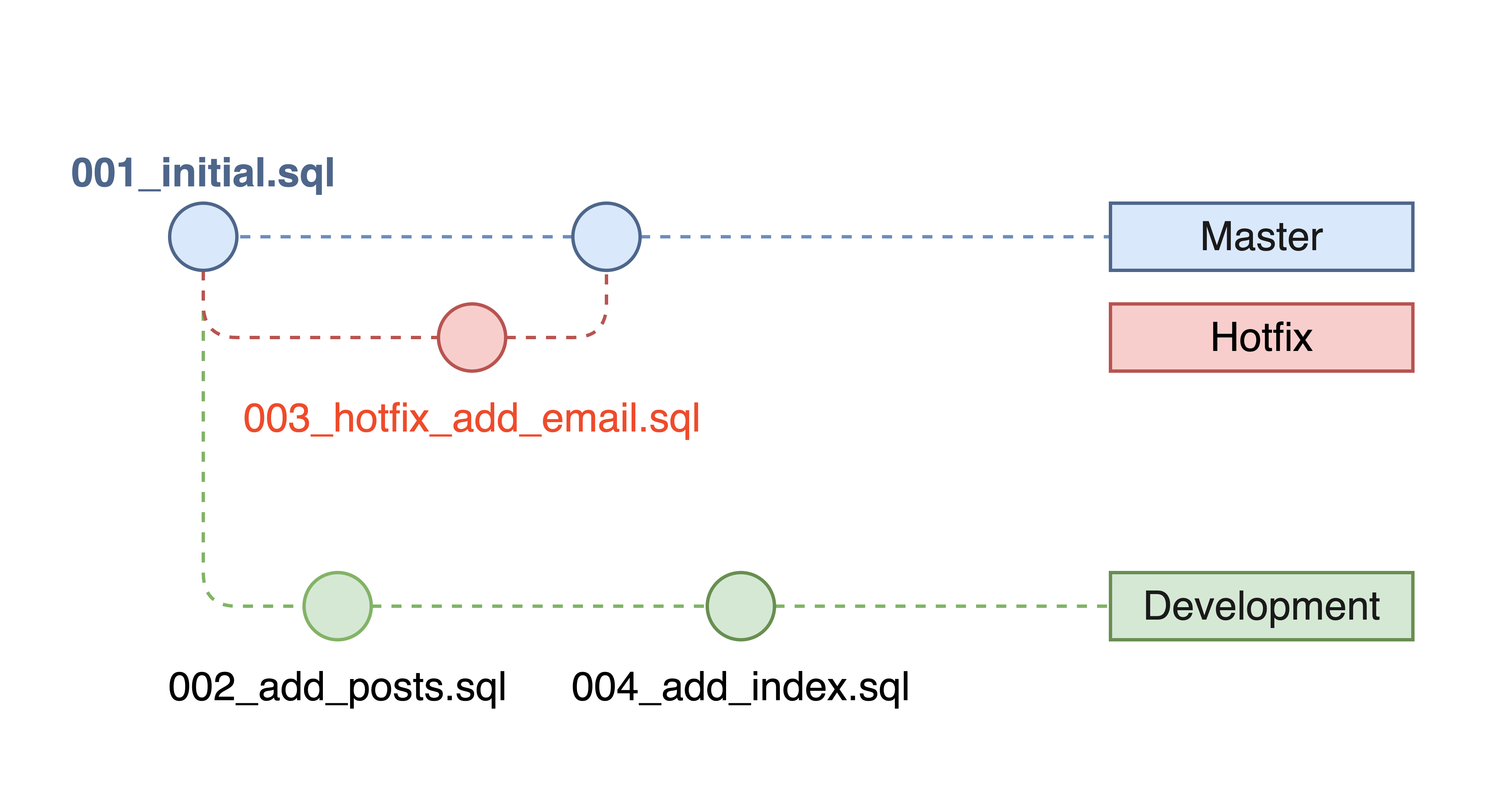

When working with multiple branches and applying hotfixes directly to production, out-of-order migration conflicts may occur if migration files are created with timestamps that don't reflect the actual merge order.

Consider this scenario:

Initial state:

001_initial.sql001_initial.sql002_add_posts.sql004_add_index.sqlAfter hotfix applied directly to production:

001_initial.sql003_hotfix_add_email.sql ← hotfix added001_initial.sql002_add_posts.sql004_add_index.sql

After merging master into dev - the problem:

001_initial.sql - Applied to production002_add_posts.sql - Dev-only, not applied to production003_hotfix_add_email.sql - Applied to production004_add_index.sql - Dev-only, not applied to productionThis creates a non-linear migration history where migration files 002 and 004 were created before and after the hotfix timestamp but haven't been applied to production.

How can I resolve the pq: out of shared memory error that appears while Atlas loads my schema?

How do I manage PostgreSQL extensions with Atlas?